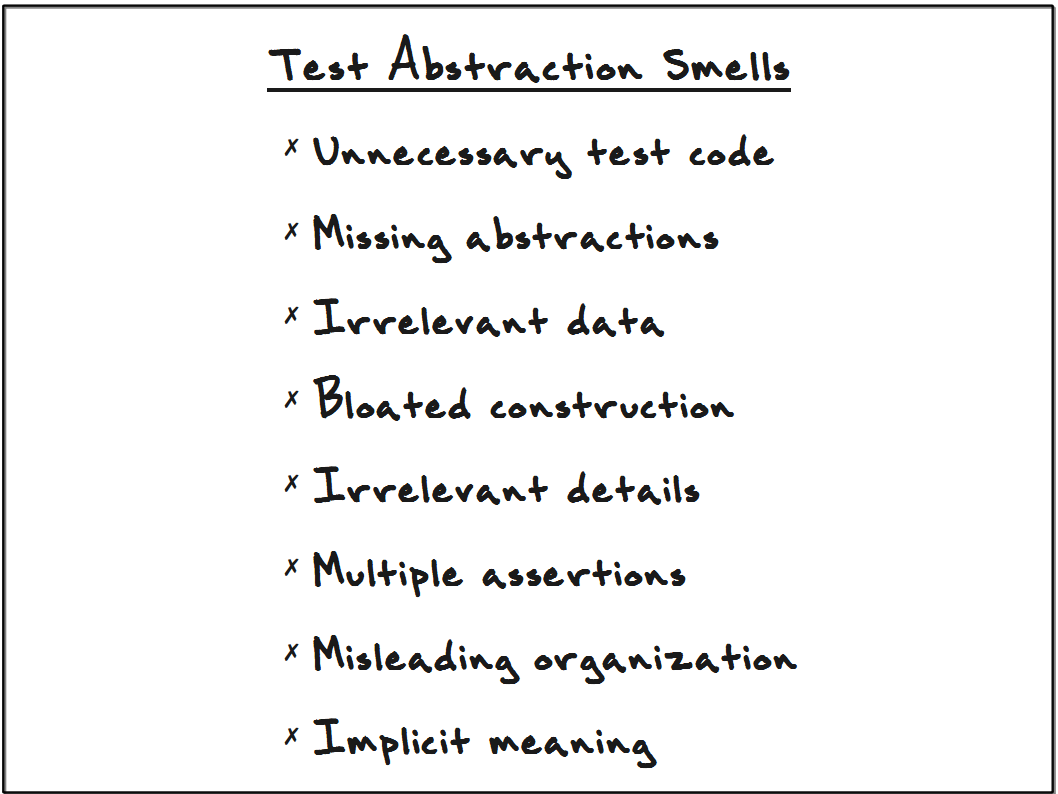

In our article Test Abstraction: Eight Techniques to Improve Your Tests (published by PragPub), we whittled down a convoluted, messy test into a few concise and expressive test methods, using this set of smells as a guide. We improved the abstraction of this messy test by emphasizing its key parts and de-emphasizing its irrelevant details.

Stripped down, the "goodness" of a test is largely a matter of how quickly these questions can be answered:

- What's the point of this test?

- Why is the assertion expecting this particular answer?

- Why did this test just fail?

These are the eight smells we used as a guide:

- Unnecessary test code. Eliminate test constructs that clutter the flow of your tests without imparting relevant meaning. Most of the time, "not null" assertions are extraneous. Similarly, eliminate try/catch blocks from your tests (in all but negative-case tests themselves).

- Missing abstractions. Are you exposing several lines of implementation detail to express test set-up (or verification) that represents a single concept? Think in terms of reading a test: "Ok, it's adding a new student to the system. Fine. Then it's creating an empty list, and then adding A to that list, then B, and ..., and then that list is used to compare against the expected grades for the student." The list construction is ugly, exposed detail. Restructure the code so that your reader, in one glance, can digest a single line that says "ensure the student's expected grades are A, A, B, B, and C."

- Irrelevant data. "Say, why does that test pass the value '2' to the SUT? It looks like they pass in a session object, too... does it require that or not?" Every piece of data shown in the test that bears no weight on its outcome is clutter that your reader must wade through and inspect. "Is that value of '2' the reason we get output of '42'?" Well, no, it's not. Take it out, hide it, make it more abstract! (For example, we'll at times use constant names like ARBITRARY_CHARGE_AMOUNT.)

- Bloated construction. It may take a bit of setup to get your SUT into the state needed for the test to run, but if that setup isn't relevant to understanding the core of the test, move the clutter out. Excessive setup is a design smell, showing that the code being tested needs rework. Don't cope with the problem by leaving extensive setup in your test; tackle it by reworking the code as soon as you have reasonable test coverage. Of course, the problem of untestable code is largely eliminated through the use of TDD.

- Irrelevant details. Is every step of the test really needed? For example, suppose your operational system requires the presence of a global session object, pre-populated with a few things. You may find that you can't even execute your unit test without having it similarly populate the session object. For most tests, however, the details of populating the session object have nothing to do with the goal of the test. There are usually better ways to design the code unit, and if you can't change your design to use one of those ways, you should at least bury the irrelevant details.

- Multiple assertions. A well-abstracted unit test represents one case: "If I do this stuff, I observe that behavior." Combining several cases muddies the abstraction--which setup/execution concepts are relevant to which resulting behaviors? Which assertion represents the goal of the test case? Most tests with multiple assertions are ripe for splitting into multiple tests. Where it makes sense to keep multiple assertions in a single test, can you at least abstract its multiple assertions into a single helper method?

- Misleading organization. You can easily organize tests using AAA (Arrange-Act-Assert). Remember that once green, we re-read any given test (as a document of SUT behavior) only infrequently--either when the test unexpectedly fails or when changes need to be made. Being able to clearly separate the initial state (Arrange) from the activity being tested (Act) from the expected result (Assert) will speed the future reader (perhaps yourself in 5 months) on his way. When setup, actions, and assertions are mixed, the test will require more careful study. Spare your team members the chore of repeatedly deciphering your tests down the road--spend the time now to make them clear and obvious.

- Implicit meaning. It's not all just getting rid of stuff; abstraction requires amplifying essential test elements. Imagine a test that says "apply a fine of 30 cents on a book checked out December 7 and returned December 31." Why those dates? Why that amount? If we challenged you to tell us the rules that govern the outcome of this test, you'd likely get them wrong. A meaningful test would need to describe, in one manner or another, these relevant concepts and elements:

    - Books are normally checked out for 21 days

    - Christmas--a date in the 21-day span following December 7--is a grace day (i.e., no fines are assessed)

    - The due date is thus December 29

    - December 31 is two days past the due date

    - Books are fined 20 cents for the first day late and 10 cents for each additional day

    - 30c = 20c + 10c
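One way a test might surface those rules is sketched below in Python. Everything named here is our own assumption for illustration: the constants, `due_date`, `fine_cents`, the choice of year, and the reading that a grace day simply doesn't count toward the loan period. The real system's rules could be implemented quite differently. What matters is the shape of the test: the dates and the expected amount are derived from named concepts rather than appearing as bare literals.

```python
from datetime import date, timedelta

# All names and values below are hypothetical, chosen to mirror the rules listed above.
LOAN_PERIOD_DAYS = 21
FIRST_DAY_FINE_CENTS = 20
ADDITIONAL_DAY_FINE_CENTS = 10
ARBITRARY_YEAR = 2023  # the year has no bearing on the outcome
CHRISTMAS = date(ARBITRARY_YEAR, 12, 25)
GRACE_DAYS = {CHRISTMAS}


def due_date(checkout):
    """Assumed rule: grace days do not count toward the loan period."""
    day = checkout
    remaining = LOAN_PERIOD_DAYS
    while remaining > 0:
        day += timedelta(days=1)
        if day not in GRACE_DAYS:
            remaining -= 1
    return day


def fine_cents(checkout, returned):
    """Assumed rule: a higher fine for the first late day, a lower one for each day after."""
    days_late = (returned - due_date(checkout)).days
    if days_late <= 0:
        return 0
    return FIRST_DAY_FINE_CENTS + (days_late - 1) * ADDITIONAL_DAY_FINE_CENTS


def test_fine_for_book_returned_two_days_late_when_loan_spans_christmas():
    # Arrange: the loan period includes one grace day, which pushes the due date out
    checkout = date(ARBITRARY_YEAR, 12, 7)
    grace_days_in_loan = 1  # Christmas
    due = checkout + timedelta(days=LOAN_PERIOD_DAYS + grace_days_in_loan)
    two_days_late = due + timedelta(days=2)

    # Act
    fine = fine_cents(checkout, returned=two_days_late)

    # Assert: one first-day fine plus one additional-day fine
    assert fine == FIRST_DAY_FINE_CENTS + ADDITIONAL_DAY_FINE_CENTS
```

A reader of this test can answer "why those dates?" and "why that amount?" without opening the production code.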

A unit test that requires you to dig through code to discern the reasons for its outcome is wasteful.
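To make a few of the earlier smells concrete, here is a minimal Python sketch of the student-grades test after cleanup. The `GradeBook` class, the `assert_grades` helper, and the `ARBITRARY_STUDENT` constant are all hypothetical stand-ins we invented for illustration. They are not code from the PragPub article. The sketch touches three smells: the list-building detail is hidden behind a single helper (missing abstractions), the student's name is flagged as not mattering (irrelevant data), and the test is laid out as Arrange-Act-Assert (misleading organization).

```python
class GradeBook:
    """Hypothetical stand-in for the system under test."""

    def __init__(self):
        self._grades = {}

    def add_student(self, name):
        self._grades[name] = []

    def record_grade(self, name, grade):
        self._grades[name].append(grade)

    def grades_for(self, name):
        return list(self._grades[name])


# The name has no bearing on the outcome, and the constant says so.
ARBITRARY_STUDENT = "any student"


def assert_grades(book, student, *expected):
    """Test helper: one line at the call site expresses a single concept."""
    assert book.grades_for(student) == list(expected)


def test_grades_are_reported_in_the_order_recorded():
    # Arrange
    book = GradeBook()
    book.add_student(ARBITRARY_STUDENT)

    # Act
    for grade in ["A", "A", "B", "B", "C"]:
        book.record_grade(ARBITRARY_STUDENT, grade)

    # Assert
    assert_grades(book, ARBITRARY_STUDENT, "A", "A", "B", "B", "C")
```

The assertion line now reads much like the sentence "ensure the student's expected grades are A, A, B, B, and C," and nothing in the test invites the question of whether the student's name affects the result.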