Red, green, refactor. The first step in the test-driven development (TDD) cycle is to ensure that your newly written test fails before you try to write the code to make it pass. But why expend the effort and waste the time to run the tests? If you're following TDD, you write each new test for code that doesn't yet exist, and so it shouldn't pass.

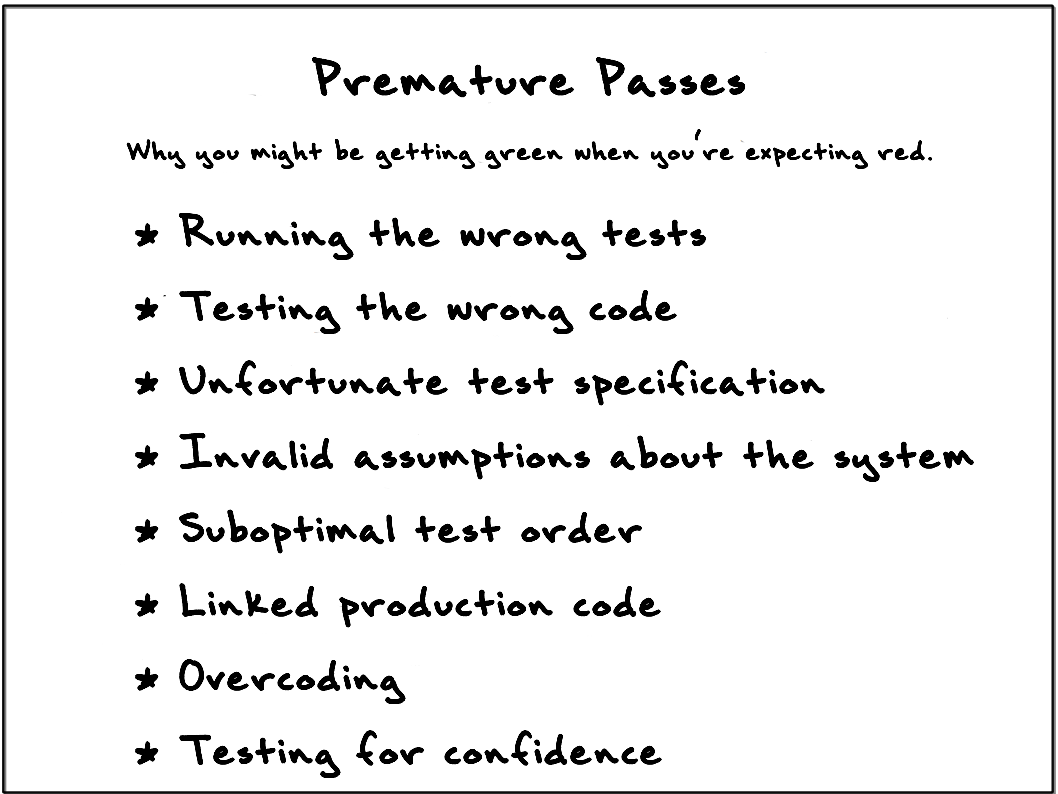

But reality says it will happen--from time to time, you will undoubtedly get a green bar when you expect a red bar. (We call this occurrence a premature pass.) Understanding one of the many reasons why you got a premature pass might help save you precious time.

- Running the wrong tests. This smack-your-forehead event occurs when you think you were including your new test in the run, but were not, for one of myriad reasons. Maybe you forgot to compile it or link it in, ran the wrong suite, disabled the new test, filtered it out, or coded it improperly so that the tool didn't recognize it as a legitimate test. Suggestion: Always know your current test count, and ensure that your new test causes it to increment.

- Testing the wrong code. You might have a premature pass for some of the same reasons as "running the wrong tests," such as failure to compile (in which case the "wrong code" that you're running is the last compiled version). Perhaps the build failed and you thought it passed, or your classpath is picking up a different version. More insidiously, if you're mucking with test doubles, your test might not be exercising the class implementation that you think it is (polymorphism can be a tricky beast). Suggestion: Throw an exception as the first line of code you think you're hitting, and re-run the tests.

- Unfortunate test specification. Sometimes you mistakenly assert the wrong thing, and it happens to match what the system currently does. I recently coded an `assertTrue` where I meant `assertFalse`, and spent a few minutes scratching my head when the test passed. Suggestion: Re-read (or have someone else read) your test to ensure it specifies the proper behavior.

- Invalid assumptions about the system. If you get a premature pass, you know your test is recognized and it's exercising the right code, and you've re-read the test... perhaps the behavior already exists in the system. Your test assumed that the behavior wasn't in the system, and following the process of TDD proved your assumption wrong. Suggestion: Stop and analyze your system, perhaps adding characterization tests, to fully understand how it behaves.

- Suboptimal test order. As you are test-driving a solution, you're attempting to take the smallest possible incremental steps to grow behavior. Sometimes you'll choose a less-than-optimal sequence. You subsequently get a premature pass because the prior implementation unavoidably grew out a more robust solution than desired. Suggestions: Consider starting over and seeking a different sequence with smaller increments. Try to apply Uncle Bob's Transformation Priority Premise (TPP).

- Linked production code. If you are attempting to devise an API to be consumed by multiple clients, you'll often introduce convenience methods such as `isEmpty` (which inquires about the size to determine its answer). These convenience methods necessarily duplicate code. If you try to assert against `isEmpty` every time you assert against size, you'll get premature passes. Suggestions: Create tests that document the link from the convenience method to the core functionality, demonstrating them. Or combine the related assertions into a single custom assertion (or helper method).

- Overcoding. A different form of "invalid assumptions about the system," you overcode when you supply more of an implementation than necessary while test-driving. This is a hard lesson of TDD--to supply no more code or data structure than necessary when getting a test to pass. Suggestion: Hard lessons are best learned with dramatic solutions. Discard your bloated solution and try again. It'll be better, we promise.

- Testing for confidence. On occasion, you'll already suspect that a test will generate a premature pass. There's nothing wrong with writing a couple of additional tests ("I wonder if it works for this edge case"), particularly if those tests give you confidence, but technically you have stepped outside the realm of TDD and moved into the realm of TAD (test-after development). Suggestions: Don't hesitate to write more tests to give you confidence, but you should generally have a good idea of whether they will pass or fail before you run them.

- Never skip running the tests to ensure you get a red bar.

- Pause and think any time you get a premature pass.
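To make the "linked production code" suggestion above concrete, here is a minimal sketch using Python's standard `unittest` module. The language is an assumption (the text names none), so the convenience method appears as `is_empty` instead of `isEmpty`. `Basket`, `assert_size`, and `run_suite` are hypothetical names invented for illustration. One test documents the link between the convenience method and size, and a custom assertion bundles the two checks so that other tests never assert them separately.

```python
import unittest


class Basket:
    """Hypothetical collection, invented for this illustration."""

    def __init__(self):
        self._items = []

    def add(self, item):
        self._items.append(item)

    def size(self):
        return len(self._items)

    def is_empty(self):
        # Convenience method: its answer is deliberately linked to size().
        return self.size() == 0


class BasketTest(unittest.TestCase):
    def assert_size(self, basket, expected):
        """Custom assertion: checks size() and the linked is_empty() together."""
        self.assertEqual(expected, basket.size())
        self.assertEqual(expected == 0, basket.is_empty())

    def test_is_empty_follows_size(self):
        # Documents the link once, so other tests need not repeat it.
        basket = Basket()
        self.assertTrue(basket.is_empty())
        basket.add("apple")
        self.assertFalse(basket.is_empty())

    def test_size_grows_with_each_added_item(self):
        basket = Basket()
        self.assert_size(basket, 0)
        basket.add("apple")
        basket.add("pear")
        self.assert_size(basket, 2)


def run_suite():
    """Run BasketTest and return the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(BasketTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Calling `run_suite()` returns a result whose `testsRun` attribute reports how many tests actually ran, which is one way to follow the earlier advice to know your test count and confirm that a new test increments it.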