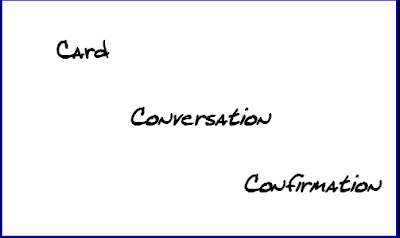

(font is Flourine)

This is a dangerous "power" card. This is not the first card one should reach for, and one should not make these changes without some consultation with peers. These tend not to be the kinds of changes a transitioning team would make for itself. Still, the practices do have merit and can be used to train a team and the organization around it to adopt agile behaviors. These are mostly "trellis" measures, probably best selected by a transition consultant or coach.

You may shorten iterations to force priority when you find that a team is suffering from a slump-and-binge cycle. Teams may slump for a number of days, knowing that they still have plenty of time to complete a feature, and then cram in overtime at the last minute to complete their work before the deadline. This syndrome is often associated with term papers, finals cramming, and amateur theatre rehearsals.

With shorter iterations, the excitement of picking up new work and the immediacy of due dates bring focus. The goal is that each team member goes home happy and tired, and comes back refreshed and ready to work after a full night's sleep. That can work with shorter iterations and shorter days. This extreme measure should be taken to stop overtime, rather than to inspire it.

Mandate that a subject-matter expert can only help others complete tasks so that expertise will spread through the team. If an expert works alone in her area of expertise, then the team continues to be starved of experience. Worse yet, you may find that work has to queue for the expert and "steel threads" abound. An expert may not want to "own" an area of the application, but the organization may force it to be so. This does bad things to the "truck number." For the sake of the expert, the team, and the software organization, it is best that a subject-matter expert not be siloed.

You might require that 40% of all work is done at the iteration midpoint to avoid the slump-and-binge cycle described above, since this practice essentially cuts one iteration into two, with a slightly softer deadline for the first one.

If you revert or discard old, unfinished work, you will cut down on the work-in-progress (AKA "waste") of a team. People don't want to do work that isn't going to be deployed, so they tend to hold onto unfinished work in hopes of completing it on the sly "when time allows." Sadly, code becomes dated outside of the current line of version control. Code that is old may no longer merge into the codeline. The longer the interval since the last integration, the less likely it is that code can be integrated. It is often better to scrap and rewrite old features. It certainly makes a statement. Overplaying this card causes depression in programmers and outrage in Customers, so be careful with it. Not playing this card can result in risky rework, which is waste. It is far better if the team only takes on the amount of work it can complete and successfully field in an iteration.

Random weekly team rosters will force closure on features if the team is suffering from soft iteration boundaries. Often teams take credit for work not fully completed (for shame!) and will try to quietly squeeze in the completion of hung-over features during the next iteration. Of course, if they couldn't complete 12 points of work last iteration, why do they think they can complete another 12 points of features plus three points of last iteration's work in the same number of days? If the team is gaming the iterations in such a way, create two teams and randomly shift members between the two. It is especially handy if one team is tasked with new features while the other deals with production issues and bugs. If you don't know where you'll be next week, can you afford to let a feature run long? If you have to complete more work than you can do in an iteration, you really must seek help from teammates. You also need someone who can take over if you're shifted into a new team, so you need to pair with several people on your team, just in case. One risks resentment and lowered short-term productivity with this extreme measure, but one can benefit by spreading more code knowledge and by getting more bugs fixed with the second team.
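The random shuffle itself is simple enough to automate so that nobody can be accused of picking favorites. The following is only a minimal sketch, assuming a single pool of people split into two near-equal teams each week; the function name `weekly_rosters` and the sample names are hypothetical, not part of any tool.

```python
import random


def weekly_rosters(members, rng=None):
    """Randomly split members into two teams of near-equal size.

    `rng` is an optional random.Random instance (an assumption made here
    so that a draw can be reproduced if anyone questions it).
    """
    if rng is None:
        rng = random.Random()
    shuffled = list(members)   # copy so the caller's list is untouched
    rng.shuffle(shuffled)
    half = (len(shuffled) + 1) // 2   # first team gets the extra person
    return shuffled[:half], shuffled[half:]


if __name__ == "__main__":
    people = ["Ana", "Ben", "Chen", "Dee", "Eli", "Fay"]  # hypothetical names
    features, support = weekly_rosters(people)
    print("New features:", features)
    print("Production issues and bugs:", support)
```

Run it once at the start of each week. Everyone lands on exactly one team, and no one knows in advance which.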

If you find that your team is suffering from "pair marriages" you might need to stir pairs (at least) twice daily. A bell, loudspeaker announcement, or other mechanism can be used to signal that it's pair-switching time. You might pass a rule that a pair must switch out one partner each morning and after each lunch break. Either way, you can push your team to learn from each other by pairing with someone different for a change. Pair programming was never intended to be conducted by two people chained to one desk for the duration.

It is wise to eliminate individual work assignments, as they create a disincentive against pair programming. If you have work to complete, and I have work to complete, I am less likely to help you because it will put my work at risk of non-completion. Since my name is on my feature, I will be evaluated on my reputation for completing work. In such a system, pairing is an act of great bravery and potentially high risk.

Esther Derby has already said everything that needs to be said about the follies of such a system.

I worry a little about this card. I know that agile is about values, and that a primary value is that we value people. We value individuals and interactions over processes and tools. Yet sometimes the people we value need to spend some time in a system that encourages interaction and completion so that they can learn to work together more successfully. I expect this to be the set of ideas that brings us the most angry reader mail.

Yet I think that you have to change the system sometimes. So be it.